Thursday, November 30, 2017

Exclusive: How NVIDIA Research is Reinventing the Display Pipeline for the Future of VR, Part 2

In Part 1 of this article we explored the current state of CGI, game, and contemporary VR systems. Here in Part 2 we look at the limits of human visual perception and show several of the methods we’re exploring to drive performance closer to them in VR systems of the future.

Guest Article by Dr. Morgan McGuire

Dr. Morgan McGuire is a scientist on the new experiences in AR and VR research team at NVIDIA. He’s contributed to the Skylanders, Call of Duty, Marvel Ultimate Alliance, and Titan Quest game series published by Activision and THQ. Morgan is the coauthor of The Graphics Codex and Computer Graphics: Principles & Practice. He holds faculty positions at the University of Waterloo and Williams College.

Note: Part 1 of this article provides important context for this discussion; consider reading it before proceeding.

Reinventing the Pipeline for the Future of VR

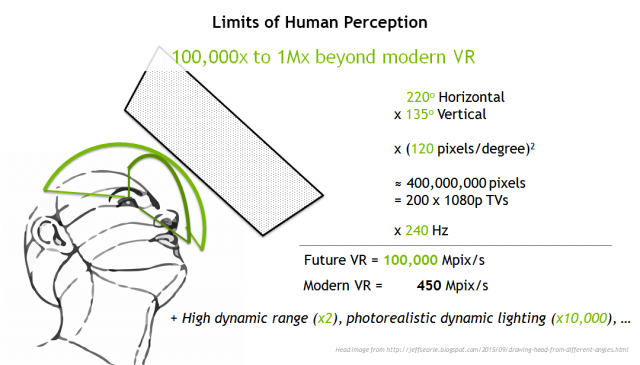

We derive our future VR specifications from the limits of human perception. There are different ways to measure these, but to make the perfect display you’d need roughly the equivalent of 200 HDTVs updating at 240 Hz. This equates to about 100,000 megapixels per second of graphics throughput.

Recall that modern VR is around 450 Mpix/sec today. This means we need a 200x increase in performance for future VR. But with factors like high dynamic range, variable focus, and current film standards for visual quality and lighting in play, the more realistic need is a 10,000x improvement… and we want this with only 1ms of latency.
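
The throughput figures above can be checked with a few lines of arithmetic. This sketch assumes an HDTV means a 1920 x 1080 panel, which the text does not specify.

```python
# Back-of-the-envelope check of the "perfect display" throughput figure.
# Assumption: one HDTV = 1920 x 1080 pixels (not stated in the article).
HDTV_PIXELS = 1920 * 1080
NUM_HDTVS = 200
REFRESH_HZ = 240
TODAY_VR_MPIX_PER_S = 450  # modern VR throughput quoted in the article


def required_mpix_per_second(num_displays, pixels_per_display, refresh_hz):
    """Pixels that must be produced each second, in megapixels per second."""
    return num_displays * pixels_per_display * refresh_hz / 1e6


target = required_mpix_per_second(NUM_HDTVS, HDTV_PIXELS, REFRESH_HZ)
speedup = target / TODAY_VR_MPIX_PER_S
print(f"target ~{target:,.0f} Mpix/s, ~{speedup:.0f}x today's VR")
# prints: target ~99,533 Mpix/s, ~221x today's VR
```

The result, about 221x, is consistent with the rounded 200x in the text.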

We could theoretically accomplish this by committing increasingly greater computing power, but brute force simply isn’t efficient or economical. Brute force won’t get us to pervasive use of VR. So, what techniques can we use to get there?

Rendering Algorithms

Foveated Rendering

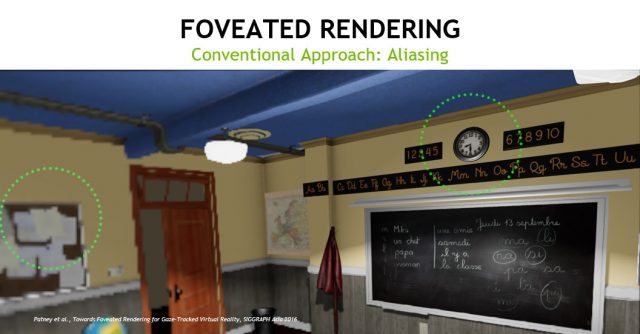

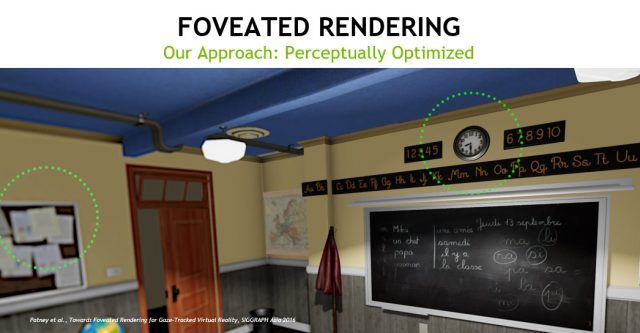

Our first approach to performance is foveated rendering, a technique that reduces the quality of images in a user’s peripheral vision. It exploits an aspect of human perception to increase performance without a perceptible loss in quality.

Because the eye itself only has high resolution right where you’re looking, in the fovea centralis region, a VR system can undetectably drop the resolution of peripheral pixels for a performance boost. It can’t just render at low resolution, though. The above images are wide-field-of-view pictures shrunk down for display here in 2D. If you looked at the clock in VR, then the bulletin board on the left would be in the periphery. Just dropping resolution as in the top image produces blocky graphics and a change in visual contrast. This is detectable as motion or blurring in the corner of your eye. Our goal is to compute the exact enhancement needed to produce a low-resolution image whose blurring matches human perception and appears perfect in peripheral vision (Patney et al. and Sun et al.).
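
One way to estimate how much resolution a peripheral pixel needs is an acuity model in which the smallest resolvable angle grows roughly linearly with eccentricity (the angle away from the gaze point). The sketch below computes only that resolution budget. Its constants are assumptions, and it does not implement the contrast-preserving enhancement that the text says plain low-resolution rendering lacks.

```python
import math

# Linear acuity model: the minimum angle of resolution (MAR) grows linearly
# with eccentricity. Both constants are assumptions chosen for illustration.
FOVEAL_MAR_DEG = 1.0 / 60.0  # about one arcminute at the center of gaze
MAR_SLOPE = 0.022            # extra MAR (degrees) per degree of eccentricity


def eccentricity_deg(pixel_dir, gaze_dir):
    """Angle in degrees between a pixel's view direction and the gaze direction."""
    dot = sum(p * g for p, g in zip(pixel_dir, gaze_dir))
    norms = math.sqrt(sum(p * p for p in pixel_dir)) * math.sqrt(sum(g * g for g in gaze_dir))
    # Clamp to guard acos against rounding just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


def resolution_scale(ecc_deg):
    """Fraction of foveal resolution (per axis) the eye can use at this eccentricity."""
    mar = FOVEAL_MAR_DEG + MAR_SLOPE * ecc_deg
    return FOVEAL_MAR_DEG / mar


for ecc in (0, 5, 20, 40):
    print(f"{ecc:>2} deg: {resolution_scale(ecc):.1%} of full resolution per axis")
```

A renderer could pick a shading rate per tile from `resolution_scale`, then apply a perceptual correction like the one described above.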

Light Fields

To speed up realistic graphics for VR, we’re looking at rendering primitives beyond just today’s triangle meshes. In this collaboration with McGill and Stanford, we’re using light fields to accelerate the lighting computations. Unlike today’s 2D light maps that paint lighting onto surfaces, these are a 4D data structure that stores the light passing through points in space in every direction.

They produce realistic reflections and shading on all surfaces in the scene and even dynamic characters. This is the next step of unifying the quality of ray tracing with the performance of environment probes and light maps.
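
To make the "4D" concrete: a light field can be indexed by where a ray crosses two parallel planes, two coordinates on each. The toy class below uses that textbook two-plane parameterization with nearest-neighbor lookup. The article does not say which representation the collaboration uses, and the grid sizes and extents here are hypothetical.

```python
class TwoPlaneLightField:
    """Toy 4D light field: one radiance sample per ray, indexed by (u, v, s, t).

    A ray is identified by where it crosses plane z = 0 (u, v) and plane
    z = 1 (s, t). Both planes span [-extent, extent]; lookups snap to the
    nearest stored sample.
    """

    def __init__(self, n_uv, n_st, extent=1.0):
        self.n_uv, self.n_st, self.extent = n_uv, n_st, extent
        # radiance[iu][iv][is][it]: one scalar sample per discretized ray
        self.radiance = [[[[0.0] * n_st for _ in range(n_st)]
                          for _ in range(n_uv)] for _ in range(n_uv)]

    def _index(self, coord, n):
        """Nearest grid index for a plane coordinate, or None if off the plane."""
        if abs(coord) > self.extent:
            return None
        return int(round((coord + self.extent) / (2.0 * self.extent) * (n - 1)))

    def ray_indices(self, origin, direction):
        ox, oy, oz = origin
        dx, dy, dz = direction
        if dz == 0.0:
            return None  # parallel to the planes: never crosses them
        a = (0.0 - oz) / dz  # ray parameter where it hits plane z = 0
        b = (1.0 - oz) / dz  # ray parameter where it hits plane z = 1
        idx = (self._index(ox + a * dx, self.n_uv), self._index(oy + a * dy, self.n_uv),
               self._index(ox + b * dx, self.n_st), self._index(oy + b * dy, self.n_st))
        return None if None in idx else idx

    def lookup(self, origin, direction):
        """Radiance carried along a ray, or 0.0 if it misses the stored slab."""
        idx = self.ray_indices(origin, direction)
        if idx is None:
            return 0.0
        iu, iv, is_, it = idx
        return self.radiance[iu][iv][is_][it]


lf = TwoPlaneLightField(n_uv=5, n_st=5)
lf.radiance[2][2][2][2] = 7.0  # light travelling along the +z axis
print(lf.lookup((0.0, 0.0, -1.0), (0.0, 0.0, 1.0)))  # 7.0
```

Shading a surface point then becomes a lookup per ray instead of a full lighting computation, which is the kind of speedup the text describes.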

Real-time Ray Tracing

What about true run-time ray tracing? The NVIDIA Volta GPU is the fastest ray tracing processor in the world, and its NVIDIA Pascal GPU siblings are the fastest consumer ones. At about 1 billion rays/second, Pascal is just about fast enough to replace the primary rasterizer or shadow maps for modern VR. If we unlock the pipeline with the kinds of changes I’ve just described, what can ray tracing do for future VR?
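
Dividing the two throughput figures quoted in this article shows what "just about fast enough" means: roughly two rays per displayed pixel, enough for a primary ray plus one shadow ray, but not many more.

```python
# Ray budget per displayed pixel, using the article's figures.
RAYS_PER_SECOND = 1.0e9        # approximate Pascal ray tracing throughput
VR_PIXELS_PER_SECOND = 450e6   # approximate modern VR pixel throughput

rays_per_pixel = RAYS_PER_SECOND / VR_PIXELS_PER_SECOND
print(f"{rays_per_pixel:.2f} rays per pixel")  # prints "2.22 rays per pixel"
```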

The answer is: ray tracing can do a lot for VR. When you’re tracing rays, you don’t need shadow maps at all, thereby eliminating a latency barrier. Ray tracing can also natively render red, green, and blue separately, and directly render barrel-distorted images for the lens. This avoids the separate lens-warp pass and the latency it adds.
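
A minimal sketch of what rendering each color separately and directly barrel-distorted can mean for a ray tracer: each color channel of each display pixel gets its own ray direction from a radial distortion polynomial. The polynomial is a common lens model, but the coefficients below are made up; a real headset's values come from calibrating its lens.

```python
import math

# Hypothetical per-channel radial distortion coefficients (k1, k2). Slightly
# different values per channel let the renderer cancel chromatic aberration.
CHANNEL_COEFFS = {
    "r": (0.22, 0.24),
    "g": (0.24, 0.26),
    "b": (0.26, 0.28),
}


def pixel_ray(px, py, width, height, channel, tan_half_fov=1.0):
    """Unit view-space ray direction (looking down -z) for one channel of one pixel.

    The pixel is mapped to normalized coordinates in [-1, 1], then scaled
    outward by 1 + k1*r^2 + k2*r^4. Sampling the scene farther out than the
    pixel's position produces a barrel-distorted image, which the lens's
    pincushion distortion is meant to cancel.
    """
    k1, k2 = CHANNEL_COEFFS[channel]
    x = 2.0 * (px + 0.5) / width - 1.0
    y = 1.0 - 2.0 * (py + 0.5) / height
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    dx, dy, dz = x * scale * tan_half_fov, y * scale * tan_half_fov, -1.0
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    return (dx / length, dy / length, dz / length)


for ch in "rgb":
    print(ch, pixel_ray(0, 0, 1080, 1200, ch))
```

Because the distortion is applied while generating rays, no separate warp pass over a finished image is needed.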

In fact, when ray tracing, you can completely eliminate the latency of rendering discrete frames of pixels so that there is no ‘frame rate’ in the classic sense. We can send each pixel directly to the display as soon as it is produced on the GPU. This is called ‘beam racing’ and eliminates the display synchronization. At that point, there are zero high-latency barriers within the graphics system.
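
A toy latency model makes the difference concrete. All numbers are hypothetical. The model ignores display response time and assumes the renderer can keep pace with the scan-out beam, which is the hard part in practice.

```python
# Toy comparison of whole-frame rendering versus beam racing.
# Assumptions: the frame takes render_ms to render, scan-out sweeps the rows
# top to bottom over scanout_ms, and input is sampled just before the work
# that uses it starts.


def frame_based_latency_ms(row, rows, render_ms, scanout_ms):
    """Input sampled once per frame; a row appears only after the whole frame
    has rendered and the scan-out beam has reached that row."""
    return render_ms + scanout_ms * row / rows


def beam_racing_latency_ms(rows, render_ms):
    """Each row is rendered from fresh input just before the beam reaches it,
    so its latency is roughly the time to render that one row."""
    return render_ms / rows


ROWS, RENDER_MS, SCANOUT_MS = 1080, 11.0, 11.0
frame_avg = sum(frame_based_latency_ms(r, ROWS, RENDER_MS, SCANOUT_MS)
                for r in range(ROWS)) / ROWS
race_avg = beam_racing_latency_ms(ROWS, RENDER_MS)
print(f"frame-based ~{frame_avg:.1f} ms average, beam racing ~{race_avg:.3f} ms")
# prints: frame-based ~16.5 ms average, beam racing ~0.010 ms
```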

Because there’s no flat projection plane as in rasterization, ray tracing also solves the field of view problem. Rasterization depends on preserving straight lines (such as the edges of triangles) from 3D to 2D. But the wide field of view needed for VR requires a fisheye projection from 3D to 2D that curves triangles around the display. Rasterizers break the image up into multiple planes to approximate this. With ray tracing, you can directly render even a full 360 degree field of view to a spherical screen if you want. Ray tracing also natively supports mixed primitives: triangles, light fields, points, voxels, and even text, allowing for greater flexibility when it comes to content optimization. We’re investigating ways to make all of those faster than traditional rendering for VR.
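
For example, a ray tracer can fill an equirectangular image, which covers the full 360 x 180 degree sphere, by computing one direction per pixel from its longitude and latitude; no projection plane is involved. The equirectangular layout and the axis convention are choices made for illustration, not necessarily what a spherical display would use.

```python
import math


def equirect_ray(px, py, width, height):
    """Unit view direction for pixel (px, py) of an equirectangular image.

    Longitude spans [-pi, pi) across the width and latitude runs from +pi/2
    (top) to -pi/2 (bottom). Convention (an assumption): y is up and
    longitude 0, latitude 0 looks down -z.
    """
    lon = (px + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (py + 0.5) / height * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = -math.cos(lat) * math.cos(lon)
    return (x, y, z)


# Every pixel gets a ray, including directions behind the viewer.
rays = [equirect_ray(px, py, 8, 4) for py in range(4) for px in range(8)]
print(len(rays), rays[0])
```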

In addition to all of the ways that ray tracing can accelerate VR rendering latency and throughput, a huge feature of ray tracing is what it can do for image quality. Recall from the beginning of this article that the image quality of film rendering is due to an algorithm called path tracing, which is an extension of ray tracing. If we switch to a ray-based renderer, we unlock a new level of image quality for VR.

Real-time Path Tracing

Although we can now ray trace in real time, real-time path tracing is a much bigger challenge. Path tracing is about 10,000x more computationally intensive than ray tracing. That’s why movies take minutes per frame to generate instead of milliseconds.

Under path tracing, the system first traces a ray from the camera to find the visible surface. It then casts another ray to the sun to see if that surface is in shadow. But there’s more illumination in a scene than comes directly from the sun. Some light is indirect, having bounced off the ground or another surface. So the path tracer then recursively casts another ray in a random direction to sample the indirect lighting. That point also requires a shadow ray and its own random indirect ray, and the process continues until the path tracer has cast about 10 rays for each single path.
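
The structure of that loop (one ray to find the next surface plus one shadow ray per bounce) can be sketched with a deliberately abstract scene. In this toy model, an assumption for illustration rather than a real renderer, every surface has the same albedo and each shadow ray reaches the sun with a fixed probability, so the only randomness is in the visibility tests. Five bounces give the roughly 10 rays per path mentioned in the text.

```python
import random


def trace_path(rng, albedo=0.5, sun_visible_prob=0.7, sun_radiance=1.0, bounces=5):
    """Trace one path through a toy scene; return (radiance, rays_cast)."""
    radiance = 0.0
    throughput = 1.0  # fraction of light that still reaches the camera
    rays_cast = 0
    for _ in range(bounces):
        rays_cast += 1  # ray that finds the next visible surface (camera ray first)
        rays_cast += 1  # shadow ray toward the sun
        if rng.random() < sun_visible_prob:
            radiance += throughput * albedo * sun_radiance  # direct sunlight
        throughput *= albedo  # continue along a random indirect bounce
    return radiance, rays_cast


def estimate_pixel(num_paths, seed=0):
    """Average num_paths independent paths, as a path tracer does per pixel."""
    rng = random.Random(seed)
    return sum(trace_path(rng)[0] for _ in range(num_paths)) / num_paths


for n in (1, 2, 1000):
    print(n, "paths ->", round(estimate_pixel(n), 3))
```

With the default parameters the exact pixel value is 0.678125. Estimates from one or two paths vary widely from pixel to pixel (different seeds), which is the noise described next, while a thousand paths land close to the exact value.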

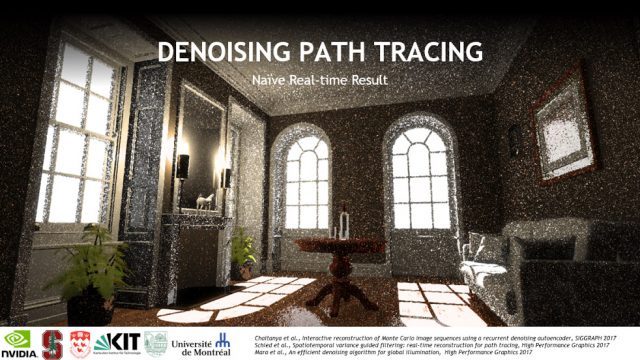

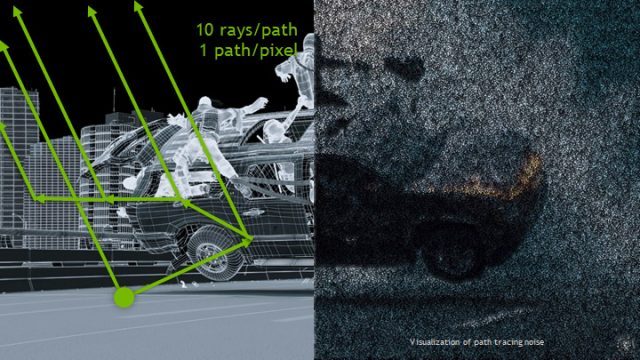

But if there are only one or two paths at a pixel, the image is very noisy because of the random sampling process, as the image above shows.

Film graphics solves this problem by tracing thousands of paths at each pixel. All of those paths at ten rays each are why path tracing is a net 10,000x more expensive than ray tracing alone.
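
The arithmetic behind that figure, together with the standard Monte Carlo result that explains why so many paths are needed: noise (standard error) falls only with the square root of the number of paths, so halving the noise costs four times the work.

```python
import math


def path_tracing_cost(paths_per_pixel, rays_per_path=10):
    """Rays per pixel, relative to one ray per pixel for plain ray tracing."""
    return paths_per_pixel * rays_per_path


def relative_noise(paths_per_pixel):
    """Monte Carlo standard error shrinks as 1 / sqrt(number of paths)."""
    return 1.0 / math.sqrt(paths_per_pixel)


print(path_tracing_cost(1000))  # 10000
print(f"{relative_noise(1) / relative_noise(1000):.1f}x less noise")  # 31.6x less noise
```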

To unlock path tracing image quality for VR, we need a way to sample only a few paths per pixel and still avoid the noise from random sampling. We think we can get there soon thanks to innovations like foveated rendering, which makes it possible to only pay for expensive paths in the center of the image, and denoising, which turns the grainy images directly into clear ones without tracing more rays.

We released three research papers this year towards solving the denoising problem. These are the result of collaborations with McGill University, the University of Montreal, Dartmouth College, Williams College, Stanford University, and the Karlsruhe Institute of Technology. These methods can turn a noisy, real-time path traced image into a clean one using only milliseconds of computation and no additional rays.

Two of the methods use the image processing power of the GPU to achieve this. The third uses the new AI processing power of NVIDIA GPUs. We trained a neural network for days on denoising, and it can now denoise images on its own in tens of milliseconds. We’re increasing the sophistication of that technique and training it more to bring the cost down. This is an exciting approach because it is one of several new methods we’ve discovered recently for using artificial intelligence in unexpected ways to enhance both the quality of computer graphics and the authoring process for creating new, animated 3D content to populate virtual worlds.
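
The three papers' algorithms are not reproduced here. As a generic illustration of image-space denoising, the sketch below applies a joint (cross) bilateral filter: it averages neighboring pixels but down-weights neighbors whose guide value (for example depth or surface normal, which a renderer gets without noise) differs, so edges survive. The parameters are hypothetical, and the filter is far simpler than the published methods.

```python
import math


def joint_bilateral_denoise(noisy, guide, radius=2, sigma_spatial=1.5, sigma_guide=0.1):
    """Edge-aware blur of a 2D list `noisy`, steered by a same-sized `guide`."""
    h, w = len(noisy), len(noisy[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = weight_sum = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < h and 0 <= nx < w):
                        continue
                    # Closer pixels count more ...
                    w_spatial = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma_spatial ** 2))
                    # ... and pixels across a guide edge count much less.
                    dg = guide[ny][nx] - guide[y][x]
                    w_guide = math.exp(-(dg * dg) / (2.0 * sigma_guide ** 2))
                    weight = w_spatial * w_guide
                    total += weight * noisy[ny][nx]
                    weight_sum += weight
            out[y][x] = total / weight_sum  # center pixel has weight 1, so never 0
    return out


# A checkerboard stands in for sampling noise around a true value of 0.5.
checker = [[0.9 if (x + y) % 2 == 0 else 0.1 for x in range(8)] for y in range(8)]
flat_guide = [[0.0] * 8 for _ in range(8)]
smoothed = joint_bilateral_denoise(checker, flat_guide)
print(round(smoothed[3][3], 3))  # close to 0.5
```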

Computational Displays

The displays in today’s VR headsets are relatively simple output devices. The display itself does hardly any processing; it simply shows the data that is handed to it. And while that’s fine for things like TVs, monitors, and smartphones, there’s huge potential for improving the VR experience by making displays ‘smarter’ about not only what is being displayed but also the state of the observer. We’re exploring several methods of on-headset and even in-display processing to push the limits of VR.

Solving Vergence-Accommodation Disconnect

The first challenge for a VR display is the focus problem, which is technically called the ‘vergence-accommodation disconnect’. All of today’s VR and AR devices force you to focus about 1.5m away. That has two drawbacks:

- When you’re looking at a very distant or close up object in stereo VR, the point where your two eyes converge doesn’t match the point where they are focused (‘accommodated’). That disconnect creates discomfort and is one of the common complaints with modern VR.

- If you’re using augmented reality, then you are looking at points in the real world at real depths. The virtual imagery needs to match where you’re focusing or it will be too blurry to use. For example, you can’t read augmented map directions at 1.5m while you’re looking 20m into the distance while driving.
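
The size of the mismatch is usually expressed in diopters (inverse meters). The sketch below assumes a typical 63 mm interpupillary distance; the 1.5 m focal distance and the 20 m driving example come from the text.

```python
import math

FIXED_FOCUS_M = 1.5  # focal distance of today's headsets, per the text
IPD_M = 0.063        # interpupillary distance: a typical adult value (assumption)


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between the two eyes' lines of sight when fixating at distance_m."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def focus_mismatch_diopters(object_distance_m, focus_m=FIXED_FOCUS_M):
    """Gap between where the eyes converge and where they must focus, in diopters."""
    return abs(1.0 / object_distance_m - 1.0 / focus_m)


for d in (0.3, 1.5, 20.0):
    print(f"{d:>5} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"mismatch {focus_mismatch_diopters(d):.2f} D")
```

Objects at the fixed 1.5 m focal distance show no mismatch; close objects show the largest.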

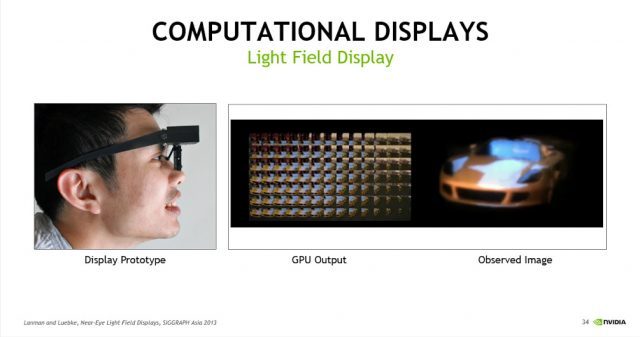

We created a prototype computational light field display that allows you to focus at any depth by presenting light from multiple angles. This display represents an important break with the past because computation is occurring directly in the display. We’re not sending mere images: we’re sending complex data that the display converts into the right form for your eye. Those tiny grids of images that look a bit like a bug’s view of the world have to be specially rendered for the display, which incorporates custom optics (a microlens array) to present them in the right way so that they look like the natural world.
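
Why the image grids need special rendering: under a microlens array, each panel pixel emits light in one particular direction. The sketch below maps a panel pixel to its ray using a pinhole approximation through the lens center. The pitch, lens size and gap are hypothetical values, not the prototype's.

```python
import math

# Hypothetical geometry for a near-eye light field display.
PIXEL_PITCH_MM = 0.01   # width of one panel pixel
PIXELS_PER_LENS = 10    # each microlens covers a 10 x 10 pixel elemental image
GAP_MM = 3.0            # distance from the panel to the microlens array


def pixel_to_ray(px, py):
    """Return (origin, unit direction) of the ray emitted for panel pixel (px, py).

    Pinhole approximation: light from the pixel leaves through the center of
    the microlens above it, heading toward the eye (+z). Neighboring pixels
    under the same lens therefore emit in different directions.
    """
    lens_cx = (px // PIXELS_PER_LENS + 0.5) * PIXELS_PER_LENS * PIXEL_PITCH_MM
    lens_cy = (py // PIXELS_PER_LENS + 0.5) * PIXELS_PER_LENS * PIXEL_PITCH_MM
    dx = lens_cx - (px + 0.5) * PIXEL_PITCH_MM
    dy = lens_cy - (py + 0.5) * PIXEL_PITCH_MM
    dz = GAP_MM
    n = math.sqrt(dx * dx + dy * dy + dz * dz)
    return (lens_cx, lens_cy, GAP_MM), (dx / n, dy / n, dz / n)


print(pixel_to_ray(4, 4))
print(pixel_to_ray(5, 4))
```

A renderer fills each pixel with the virtual scene's radiance along that ray, for example by tracing from the lens center along the negated direction into the scene behind the panel.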

That first light field display was from 2013. Next week, at the ACM SIGGRAPH Asia 2017 conference, we’re presenting a new holographic display that uses lasers and intensive computation to create light fields out of interfering wavefronts of light. Its workings are harder to visualize, but it relies on the same underlying principles and can produce even better imagery.
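
For a sense of the computation involved: one classic way to compute a hologram treats the virtual scene as a set of point sources and sums their spherical waves on the hologram plane; a phase-only modulator then displays the phase of that sum. This 1D point-source sketch is a textbook method chosen for illustration, not the algorithm behind NVIDIA's display, and the wavelength and pixel pitch are assumptions.

```python
import cmath
import math


def point_source_hologram_phase(points, num_samples, pitch_m, wavelength_m):
    """Phase-only hologram for one line of samples on the plane z = 0.

    points: iterable of (x_m, z_m, amplitude) virtual point sources, z_m > 0.
    Each point emits a spherical wave exp(i k r) / r; the hologram stores the
    phase of the summed complex field at each sample position.
    """
    k = 2.0 * math.pi / wavelength_m  # wavenumber
    phases = []
    for n in range(num_samples):
        x = (n - num_samples / 2.0) * pitch_m  # sample position, centered on 0
        field = 0j
        for px, pz, amp in points:
            r = math.hypot(x - px, pz)
            field += amp * cmath.exp(1j * k * r) / r  # waves interfere by addition
        phases.append(cmath.phase(field))  # in [-pi, pi]
    return phases


phases = point_source_hologram_phase(
    points=[(0.0, 0.2, 1.0)],  # one virtual point 20 cm from the hologram plane
    num_samples=1024, pitch_m=8e-6, wavelength_m=532e-9)
```

The cost scales with samples times scene points, which hints at why the text calls the computation intensive.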

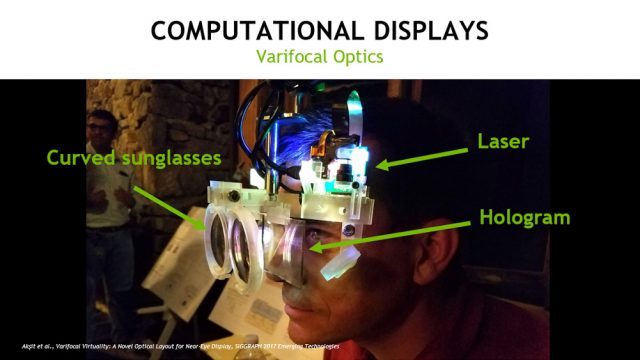

We strongly believe that this kind of in-display computation is a key technology for the future. But light fields aren’t the only approach that we’ve taken for using computation to solve the focus problem. We’ve also created two forms of variable-focus, or ‘varifocal’, optics.

This display prototype uses a laser to project the image onto a diffusing hologram. You look straight through the hologram and see its image, reflected off a curved piece of glass, as if it were in the distance.

We control the distance at which the image appears by moving either the hologram or the sunglass reflectors with tiny motors. We match the virtual object distance to the distance that you’re looking in the real world, so you can always focus perfectly naturally.
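
How far must the hologram (or reflector) move? Treating the curved glass as an ideal thin concave mirror, the mirror equation gives the answer. The 50 mm focal length is an assumption for illustration; a real design would also account for the combiner's tilt and off-axis aberrations.

```python
import math


def screen_distance_m(focal_length_m, image_distance_m):
    """Screen-to-mirror distance that places the virtual image image_distance_m away.

    Ideal thin mirror: 1/d_o + 1/d_i = 1/f with a virtual image d_i = -D,
    so d_o = f * D / (f + D). As D goes to infinity, d_o approaches f.
    """
    if math.isinf(image_distance_m):
        return focal_length_m
    return focal_length_m * image_distance_m / (focal_length_m + image_distance_m)


F = 0.05  # hypothetical 50 mm focal length
travel_mm = (screen_distance_m(F, math.inf) - screen_distance_m(F, 0.25)) * 1000
print(f"focus from 25 cm to infinity needs ~{travel_mm:.1f} mm of travel")
# prints: focus from 25 cm to infinity needs ~8.3 mm of travel
```

Under these assumptions the whole focus range fits in a few millimeters of motion, which is the kind of range small motors can cover.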

This approach requires two pieces of computation in the display: one tracks the user’s eye and the other computes the correct optics in order to render a dynamically pre-distorted image. As with most of our prototypes, the research version is much larger than what would become an eventual product. We use large components to facilitate research construction. These displays would look more like sunglasses when actually refined for real use.
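
The eye-tracking half of that computation can be approximated from vergence: if the tracker reports how far each eye has rotated inward, the two lines of sight meet at the fixation distance. This sketch assumes symmetric fixation on the midline and a 63 mm interpupillary distance; real systems also filter noisy gaze data and handle asymmetric fixation.

```python
import math


def fixation_distance_m(left_yaw_deg, right_yaw_deg, ipd_m=0.063):
    """Estimate how far away the user is looking from tracked eye rotations.

    Yaw is measured from straight ahead, positive toward the nose. With the
    lines of sight meeting on the midline, distance = (ipd / 2) / tan(vergence / 2).
    """
    vergence_rad = math.radians(left_yaw_deg + right_yaw_deg)
    if vergence_rad <= 0.0:
        return math.inf  # parallel or diverging gaze: treat as far away
    return (ipd_m / 2.0) / math.tan(vergence_rad / 2.0)


print(fixation_distance_m(1.2, 1.2))
```

The estimated distance would then set where the optics place the virtual image.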

Here’s another varifocal prototype, this one created in collaboration with researchers at the University of North Carolina, the Max Planck Institute, and Saarland University. This is a flexible lens membrane. We use computer-controlled pneumatics to bend the lens as you change your focus so that it is always correct.

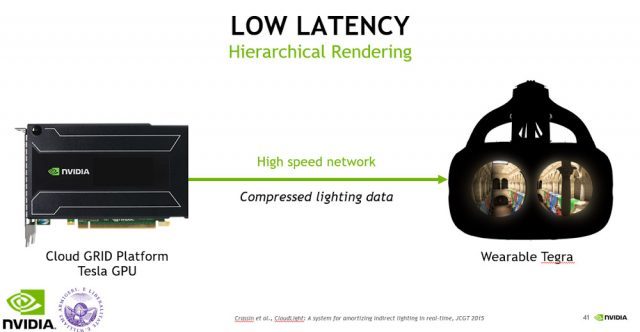

Hybrid Cloud Rendering

We have a variety of new approaches for solving the VR latency challenge. One of them, in collaboration with Williams College, leverages the full spread of GPU technology. To reduce the delay in rendering, we want to move the GPU as close as possible to the display. Using a Tegra mobile GPU, we can even put the GPU right on your body. But a mobile GPU has less processing power than a desktop GPU, and we want better graphics for VR than today’s games… so we team the Tegra with a discrete GeForce GPU across a wireless connection or, even better, with a Tesla GPU in the cloud.

This allows a powerful GPU to compute the lighting information, which it then sends to the Tegra on your body to render final images. You get the benefit of reduced latency and power requirements while actually increasing image quality.
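
A toy latency budget shows why splitting the work this way helps: once the on-body GPU renders every frame from the newest head pose, the network round trip no longer sits between head motion and photons; it only affects how old the shared lighting is. All timings below are hypothetical, and the model assumes lighting changes slowly enough that slightly stale lighting is acceptable.

```python
# Toy motion-to-photon budgets in milliseconds; every number is hypothetical.


def fully_remote_latency_ms(uplink_ms, remote_render_ms, downlink_ms, decode_ms):
    """Pose goes to the server, the frame is rendered there and streamed back."""
    return uplink_ms + remote_render_ms + downlink_ms + decode_ms


def hybrid_latency_ms(local_render_ms):
    """The on-body GPU renders from the newest pose using cached lighting."""
    return local_render_ms


def lighting_age_ms(uplink_ms, remote_lighting_ms, downlink_ms):
    """Approximate age of the remotely computed lighting when a frame uses it."""
    return uplink_ms + remote_lighting_ms + downlink_ms


print(fully_remote_latency_ms(15, 5, 15, 3))  # 38
print(hybrid_latency_ms(8))                   # 8
print(lighting_age_ms(15, 30, 15))            # 60
```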

Reducing the Latency Baseline

Of course, you can’t push latency below the frame period. If the display updates at 90 FPS, then it is impossible to have latency less than 11 ms in the worst case, because that’s how long the display waits between frames. So, how fast can we make the display?
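
The same bound, computed for a few refresh rates, including the 16,000 binary frames per second of the prototype display discussed in this section:

```python
def frame_period_ms(refresh_hz):
    """Time between display updates: new content may wait this long to appear."""
    return 1000.0 / refresh_hz


for hz in (90, 240, 16000):
    print(f"{hz:>5} Hz -> {frame_period_ms(hz):.4f} ms")
```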

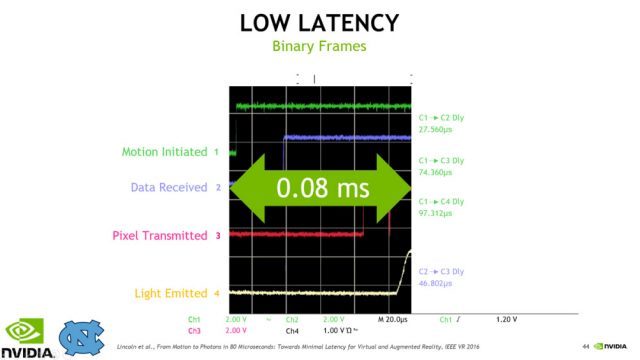

We collaborated with scientists at the University of North Carolina to build a display that runs at sixteen thousand binary frames per second. Here’s a graph from a digital oscilloscope showing how well this works for the crucial case of a head turning. When you turn your head, latency in the screen update causes motion sickness.

In the graph, time is on the horizontal axis. When the top, green line jumps, that is the time at which the person wearing the display turned their head. The yellow line shows when the display updated. It jumps up to show the new image only 0.08 ms later, about 250 times better than the 20 ms you experience in the worst case on a commercial VR system today.

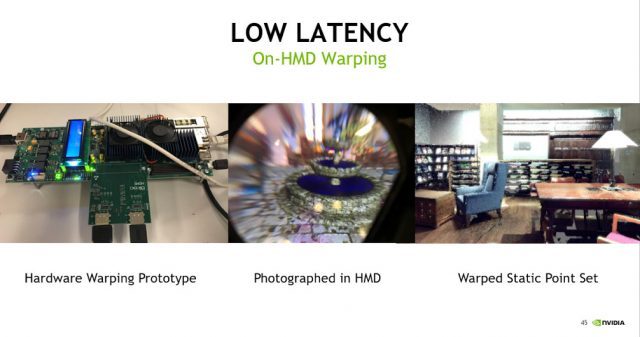

The renderer can’t run at 16,000 fps, so this kind of display works by Time Warping the most recent image to match the current head position. We speed that Time Warp process up by running it directly on the head-mounted display. The image above shows our custom on-head processor prototype for this.
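
A minimal sketch of the simplest, rotation-only form of Time Warp for a single image row, assuming a pinhole projection and yaw-only head motion. Real implementations use the full head rotation, lens distortion and filtered sampling, and the sign convention here is an assumption.

```python
import math


def timewarp_row(row, fov_x_deg, delta_yaw_deg):
    """Re-project one rendered image row to a newer head yaw.

    For each output pixel, find its view angle under the current pose, add
    the yaw change to express that direction in the pose the frame was
    rendered with, and sample that column (nearest neighbor).
    delta_yaw_deg = current yaw - render yaw, positive toward +x.
    Pixels that fall outside the rendered image become None.
    """
    width = len(row)
    tan_half = math.tan(math.radians(fov_x_deg) / 2.0)
    delta = math.radians(delta_yaw_deg)
    out = []
    for px in range(width):
        ndc = 2.0 * (px + 0.5) / width - 1.0       # [-1, 1] across the row
        angle = math.atan(ndc * tan_half) + delta  # same direction, render pose
        if abs(angle) >= math.pi / 2.0:
            out.append(None)
            continue
        src_px = int(round((math.tan(angle) / tan_half + 1.0) * width / 2.0 - 0.5))
        out.append(row[src_px] if 0 <= src_px < width else None)
    return out


print(timewarp_row(list(range(8)), 90.0, 10.0))
```

Turning the head toward +x shifts the old content toward -x and leaves unrendered pixels at the right edge, one reason warping only the last 2D image has limits.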

Unlike regular Time Warp, which distorts the 2D image, or the more advanced Space Warp, which uses 2D images with depth, our method also works on a full 3D data set. The picture on the far right shows a case where we’ve warped a full 3D scene in real time. In this system, the display itself can keep updating while you walk around the scene, even when temporarily disconnected from the renderer. This allows us to run the renderer at a low rate to save power or increase image quality, and to produce low-latency graphics even when wirelessly tethered across a slow network.
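
Warping a full 3D data set means transforming stored 3D points into the current head pose and projecting them, rather than shifting a 2D image. The sketch below splats a list of colored points into a small pinhole image with a z-buffer. It is a generic point reprojection under an assumed y-up, -z-forward convention, not a description of the prototype's data structures.

```python
import math


def project_points(points_world, cam_pos, cam_yaw_deg, focal_px, width, height):
    """Render stored 3D points from a camera at an arbitrary new pose.

    points_world: list of (x, y, z, color) in world space (y up).
    The camera sits at cam_pos, looks down its local -z axis and is rotated
    by cam_yaw_deg about the y axis. Returns rows of colors, keeping the
    nearest point per pixel (z-buffer); empty pixels are None.
    """
    yaw = math.radians(cam_yaw_deg)
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    image = [[None] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for x, y, z, color in points_world:
        # World -> camera: translate, then apply the inverse yaw rotation.
        tx, ty, tz = x - cam_pos[0], y - cam_pos[1], z - cam_pos[2]
        cx = cos_y * tx - sin_y * tz
        cz = sin_y * tx + cos_y * tz
        if cz >= 0.0:
            continue  # behind the camera
        d = -cz
        u = int(round(width / 2.0 - 0.5 + focal_px * cx / d))
        v = int(round(height / 2.0 - 0.5 - focal_px * ty / d))
        if 0 <= u < width and 0 <= v < height and d < depth[v][u]:
            depth[v][u] = d
            image[v][u] = color
    return image


scene = [(0.0, 0.0, -5.0, "red"), (-5.0, 0.0, 0.0, "green")]
print(project_points(scene, (0.0, 0.0, 0.0), 0.0, 2.0, 3, 3))
print(project_points(scene, (0.0, 0.0, 0.0), 90.0, 2.0, 3, 3))
```

Calling it again with a new `cam_pos` or `cam_yaw_deg` redraws the same stored points from the new viewpoint without involving the renderer, which is what lets the display keep updating while disconnected.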

The Complete System

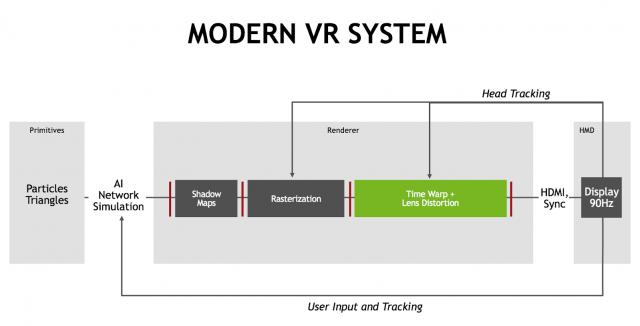

As a reminder, in Part 1 of this article we identified the rendering pipeline employed by today’s VR headsets:

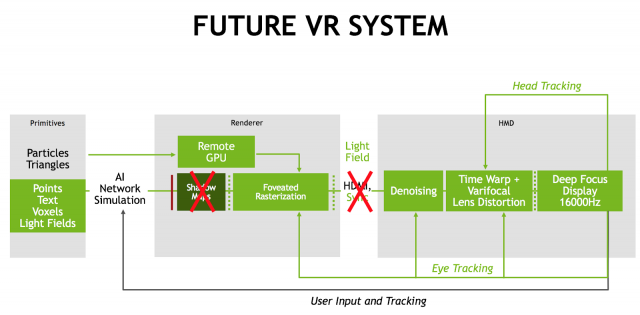

Putting together all of the techniques just described, we can sketch out not just individual innovations but a completely new vision for building a VR system. This vision removes almost all of the synchronization barriers. It spreads computation out into the cloud and right onto the head-mounted display. Latency is reduced by 50-100x and images have cinematic quality. There’s a 100x perceived increase in resolution, but you only pay for pixels where you’re looking. You can focus naturally, at multiple depths.

We’re blasting binary images out of the display so fast that they are indistinguishable from reality. The system has proper focus accommodation, a wide field of view, low weight, and low latency…making it comfortable and fashionable enough to use all day.

By breaking ground in the areas of computational displays, varifocal optics, foveated rendering, denoising, light fields, binary frames and others, NVIDIA Research is working toward a new system for virtual experiences. As systems become more comfortable, affordable and powerful, this will become the new interface to computing for everyone.

All of the methods that I’ve described can be found in deep technical detail on our website.

I encourage everyone to experience the great, early-adopter modern VR systems available today. I also encourage you to join us in looking to the bold future of pervasive AR/VR/MR for everyone, and recognize that revolutionary change is coming through this technology.

The post Exclusive: How NVIDIA Research is Reinventing the Display Pipeline for the Future of VR, Part 2 appeared first on Road to VR.

Read more: https://www.roadtovr.com/exclusive-nvidia-research-reinventing-display-pipeline-future-vr-part-2/

Sunsoft’s PSVR MOBA ‘Dark Eclipse’ to Launch in Spring 2018

Sunsoft, the Japanese publisher and developer, today announced that its PlayStation VR multiplayer online battle arena (MOBA), Dark Eclipse, will launch in spring 2018. If you’re heading to Sony’s annual PlayStation Experience next week, you’ll be able to get your hands on a demo.

MOBAs are traditionally fast-paced affairs, as they rely on quick-twitch mouse clicks and a mind-bogglingly fast ability to traverse the map. To that end, Sunsoft is trying to dial in the right speed so players don’t tire quickly but still get the sort of MOBA experience they’re used to.

Sunsoft Senior Programmer Bill Hung explained a little more about the game in a PlayStation US blog post, saying that Dark Eclipse doesn’t require you to extend your arms to aim like in VR shooters, but rather tasks you with dropping the cursor like an object that your little army will follow, effectively slowing down gameplay for a more comfortable experience. According to Hung, the game is paced so that you can move “more casually” and allows a rest period between matches, so you can rest your body if needed.

The game will include tutorials, a practice mode, player vs A.I. bots, and of course player vs player. There will be rewards as you gain more experience, which you’ll collect regardless of whether you play solo, in casual mode, or in rank mode.

Sunsoft says there will be over twenty characters available at launch, including human-like characters called ‘Heroes’ and monster-like characters called ‘Dominators’.

The player will be able to take control of three characters (leaders). Sunsoft says it’s aiming to provide “around a thousand hours of gameplay,” although since it’s an online game, the gameplay could technically be endless. Matches are said to last between 10 and 30 minutes each.

Dark Eclipse will first launch with only 1v1 gameplay, but will at some point later include 2v2, and then later 3v3 matches. “We don’t want to jump into a situation where there are not enough players in-game at any given time, and we don’t want to have people waiting for a long time for a match,” says Hung.

To give it the future potential of becoming an eSport, Sunsoft is including a spectator mode, which will be released in an update after launch.

The post Sunsoft’s PSVR MOBA ‘Dark Eclipse’ to Launch in Spring 2018 appeared first on Road to VR.

Read more: https://www.roadtovr.com/sunsofts-psvr-moba-dark-eclipse-arrive-spring-2018/

Highlight: Stand Out "Winner Winner Raptor Dinner"

Watch the whole video here: https://www.youtube.com/watch?v=dJBKPWDKaBg -- Watch live at https://www.twitch.tv/ukrifter

YET MORE PUBG in VR w. Greig | Stand Out VR +new update (Oculus Rift Gameplay)

Please hit that subscribe button it honestly makes my day! Want to buy me a beer? https://streamlabs.com/theukrifter **Voice Acting** - I currently offer FREE voice acting for VR projects. Got an opinion? Contact me on Twitter @UKRift, or on Facebook - https://www.facebook.com/ukrifter. Also, check out my Virtual Reality articles and opinions on http://www.ukrifter.com Hugely grateful if you could share this video with your friends & family via: twitter, facebook, reddit, etc. == Who the devil are you Sir? == Typically I play with VR on my weekends and schedule video posts through the week. I invest my own money in order to review, compare and contrast. I run this for the love of the technology, I am NEVER paid for my opinion, and as a result my opinions will remain unbiased, however, as I tend to praise in public and feedback negative experiences privately to developers, I rarely review a bad title. Not seen something reviewed here, maybe you have your answer. == Special Thanks == UKRifter Logo by Jo Baker Freelance Design - http://jobaker.co.uk/ == Techno Babble for the Geeks == HMD: HTC Vive, Oculus Rift DK1 / DK2 / CV1 CPU: i7 4790K GPU: Nvidia EVGA 1080 SC edition RAM: 16GB Storage: SSD / Hybrid SSD Screen recorder: OBS Wheel: Logitech G27 Joystick: Thrustmaster T-Flight HOTAS X Mouse: RAT 7 == Legal == The thoughts and opinions expressed by UKRifter and other contributors are those of the individual contributors alone and do not necessarily reflect the views of their employers, sponsors, family members, pets or voices in their heads. == Audio Credits == UKRifter Jingle by Christopher Gray Licensed under Creative Commons: By Attribution 3.0 http://creativecommons.org/licenses/by/3.0/ Multistreaming with https://restream.io/

MORE PUBG MAYHEM w. Greig | Stand Out VR (Oculus Rift Gameplay)

Support the stream: https://streamlabs.com/theukrifter Please hit that subscribe button it honestly makes my day! Want to buy me a beer? Click here https://www.paypal.me/ukrifter **Voice Acting** - I currently offer FREE voice acting for VR projects. Got an opinion? Contact me on Twitter @UKRift, or on Facebook - https://www.facebook.com/ukrifter. Also, check out my Virtual Reality articles and opinions on http://www.ukrifter.com Hugely grateful if you could share this video with your friends & family via: twitter, facebook, reddit, etc. == Who the devil are you Sir? == Typically I play with VR on my weekends and schedule video posts through the week. I invest my own money in order to review, compare and contrast. I run this for the love of the technology, I am NEVER paid for my opinion, and as a result my opinions will remain unbiased, however, as I tend to praise in public and feedback negative experiences privately to developers, I rarely review a bad title. Not seen something reviewed here, maybe you have your answer. == Special Thanks == UKRifter Logo by Jo Baker Freelance Design - http://jobaker.co.uk/ == Techno Babble for the Geeks == HMD: HTC Vive, Oculus Rift DK1 / DK2 / CV1 CPU: i7 4790K GPU: Nvidia EVGA 1080 SC edition RAM: 16GB Storage: SSD / Hybrid SSD Screen recorder: OBS Wheel: Logitech G27 Joystick: Thrustmaster T-Flight HOTAS X Mouse: RAT 7 == Legal == The thoughts and opinions expressed by UKRifter and other contributors are those of the individual contributors alone and do not necessarily reflect the views of their employers, sponsors, family members, pets or voices in their heads. == Audio Credits == UKRifter Jingle by Christopher Gray Licensed under Creative Commons: By Attribution 3.0 http://creativecommons.org/licenses/by/3.0/ Multistreaming with https://restream.io/

Google’s Daydream Impact Project Aims to Bolster Philanthropic Efforts With VR

Google’s newly announced Daydream Impact program is a philanthropy project that provides organizations, nonprofits, and advocates for change with resources, tools, and training to create immersive VR content to support their cause. Instead of just creating advertisements, organizations using the program can now immerse users into a situation, with the hope of generating a greater empathetic response in viewers than traditional media.

Google offers the example of the melting of polar ice caps; it’s one thing to read about it or see a video, but it’s thought to be a more meaningful experience to be beside or on top of the glacier as the enormous sheets of ice calve off and crash into the ocean. Of course most people would never get a chance to see something of this magnitude in person, but immersive 360 video through a VR headset could bring people closer to that experience.

The program has already been piloted by several organizations who have created their own videos available on YouTube in 360 view:

- The Rising Seas Project documents the normal high tide and extremely high tides during the winter and summer solstices (known as King Tides) in Los Angeles, CA, to show changes in coastline environments, and impacts of rising tides.

- Harmony Labs created several anti-bullying pieces to pilot in schools; Stand-up is presented here.

- Springbok Cares is integrating VR headset use into hospital environments as entertainment and escape for patients receiving cancer treatments. In a short video provided by Google from Springbok Cares, patients responded very positively after interacting with headsets during their cancer treatments.

- Eastern Congo Initiative displays the struggles of living in the Congo and the resilience of its people.

Google says that Daydream Impact comprises two main components:

- A free online training course, available on Coursera, that teaches organizations how to create VR content. The course starts off by outlining basic hardware requirements and pre-production checklists. It provides tips, suggestions, and best practice recommendations from other content creators on how to obtain the best and most impactful 360 degree footage. Post-production work required to create the video as well as guidance on how to publish and promote videos are also covered in the training.

- Google is launching a loaner program to provide qualified applicants/projects access to equipment to produce and showcase their creations in VR. The equipment list includes: a Jump Camera, an Expeditions kit (including a tablet [for a teacher], mobile phones, VR viewers, a router, chargers, and a storage case), a Google Daydream View headset, and a Daydream-ready phone.

Applications to the Daydream Impact program can be completed by organization leaders. Accepted applicants will have six months to capture and refine their work and showcase it to their stakeholders.

Dane Erickson, the Managing Director of Eastern Congo Initiative, said that, as a non-profit, they would not be able to afford the equipment necessary to create VR content without the help of the program. He has also expressed interest in bringing their content into a classroom setting to connect with and educate children on the work that they do in Congo.

Mary Joyce, Impact Design Director at Harmony Labs, also discussed bringing their content into schools, in the hope of facilitating positive behavior change in children and encouraging them to stand up against bullying at school.

Google stated that upcoming projects and case studies will be presented from World Wildlife Fund & Condition One, UNAIDS, the International Committee of the Red Cross, Starlight Children’s Foundation, Protect our Winters, and Novo Media in 2018.

The post Google’s Daydream Impact Project Aims to Bolster Philanthropic Efforts With VR appeared first on Road to VR.

Read more: https://www.roadtovr.com/google-daydream-impact-project-bolster-philanthropic-efforts-with-vr/

Wednesday, November 29, 2017

TPCAST Review, Testing & Installation (Wireless VR Adapter for HTC Vive)

Here's our TPCAST review! Hope you enjoy. Subscribe to join our VR adventures! → https://www.youtube.com/c/caschary?sub_confirmation=1

Here is our review on the TPCAST Wireless Adapter for HTC Vive. In this video we go through:

- What’s in the box
- Quick Installation
- Our play setup
- What the wireless VR experience is like
- The issues
- Price
- Final say: Should you buy the TPCAST? And are we going to buy it?

More questions? Feel free to ask below!

[Links]

► Video SweViver: https://youtu.be/ZzK3p0d7GM4 (explanation of ethernet cables at 9:52)
► Tutorial video of TPCast: https://www.youtube.com/watch?v=Hvum4WsWEbE
► TPCast user guide: https://docs.wixstatic.com/ugd/ebb63f_09b7e6467b26498d877b616ed9cb314a.pdf
► OpenTPCast: https://github.com/OpenTPCast/Docs

[Complete recommended setup list]

1. Tripod or lightstand ($20) - http://amzn.to/2i24oxS
2. Ball head ($5) - http://amzn.to/2i2ANEK
3. Ethernet network card or USB to ethernet adapter ($20) - http://amzn.to/2jwI8wt
4. Extra battery pack ($32) - http://amzn.to/2Agfkm3
5. Extra Ethernet cable for the router ($6) - http://amzn.to/2jwxR3y
6. A license to use the VirtualHere USB Server for OpenTPCast (at least until the microphone is fixed) = $25 - https://github.com/OpenTPCast/Docs/blob/master/guides/UPGRADE.md

► Get TPCAST VR Wireless Adapter for HTC Vive here → https://www.tpcastvr.com/
More HTC Vive Gameplays & Casual Reviews → https://www.youtube.com/watch?v=RYl0nceTmYU&list=PL86GNGPnZ0siwDr9AoFLro8NF4CnAqSYx
More Oculus Rift + Touch Gameplays & Casual Reviews → https://www.youtube.com/watch?v=DKXodZ6Oryg&list=PL86GNGPnZ0sg8QLhWQ9WSnXY4Vyzx-ghA
► Like what we do? → https://www.patreon.com/casandchary
► Twitter → https://twitter.com/CasandChary
► Instagram → https://www.instagram.com/casandchary
► Facebook → https://www.facebook.com/casandchary
► Discord → https://discord.gg/YH52W2k

A special thanks to our Patreon SUPERHEROES for their amazing support ❤:

- Daniel L.

Much love, Cas and Chary VR

#vr #tpcast #htcvive

Exclusive: How NVIDIA Research is Reinventing the Display Pipeline for the Future of VR, Part 1

Virtual experiences through virtual, augmented, and mixed reality are a new frontier for computer graphics. This frontier state is radically different from modern game and film graphics. For those, decades of production expertise and stable technology have already realized the potential of graphics on 2D screens. This article describes the comprehensive new systems for virtual experiences that we’re inventing at NVIDIA.

Guest Article by Dr. Morgan McGuire

Dr. Morgan McGuire is a scientist on the new experiences in AR and VR research team at NVIDIA. He’s contributed to the Skylanders, Call of Duty, Marvel Ultimate Alliance, and Titan Quest game series published by Activision and THQ. Morgan is the coauthor of The Graphics Codex and Computer Graphics: Principles & Practice. He holds faculty positions at the University of Waterloo and Williams College.

NVIDIA Research sites span the globe, with our scientists collaborating closely with local universities. We cover a wide domain of applications, including self-driving cars, robotics, and game and film graphics.

Our innovation on virtual experiences includes technologies that you’ve probably heard a bit about already, such as foveated rendering, varifocal optics, holography, and light fields. This article details our recent work on those, but most importantly reveals our vision for how they’ll work together to transform every interaction with computing and reality.

NVIDIA works hard to ensure that each generation of our GPUs is the best in the world. Our role in the research division is thinking beyond that product cycle of steady evolutionary improvement, in order to look for revolutionary change and new applications. We’re working to take virtual reality from an early adopter concept to a revolution for all of computing.

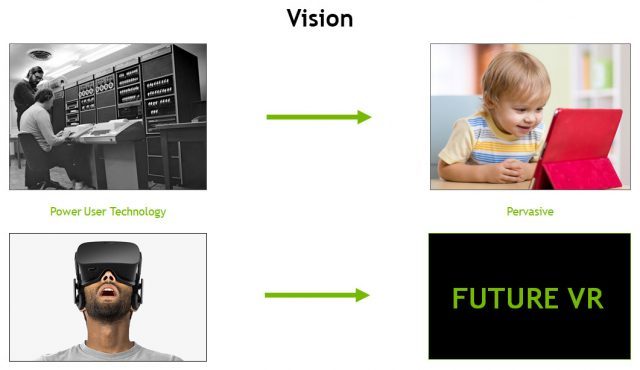

Research is Vision

Our vision is that VR will be the interface to all computing. It will replace cell phone displays, computer monitors and keyboards, televisions and remotes, and automobile dashboards. To keep terminology simple, we use VR as shorthand for supporting all virtual experiences, whether or not you can also see the real world through the display.

We’re targeting the interface to all computing because our mission at NVIDIA is to create transformative technology. Technology is truly transformative only when it is in everyday use. It has to become a seamless and mostly transparent part of our lives to have real impact. The most important technologies are the ones we take for granted.

If we’re thinking about all computing and pervasive interfaces, what about VR for games? Today, games are an important VR application for early adopter power users. We already support them through products and are releasing new VR features with each GPU architecture. NVIDIA obviously values games highly and is ensuring that they will be fantastic in VR. However, the true potential of VR technology goes far beyond games, because games are only one part of computing. So we started with VR games, but that technology is now spreading as the scope of VR expands to work, social, fitness, healthcare, travel, science, education, and every other task in which computing now plays a role.

NVIDIA is in a unique position to contribute to the VR revolution. We’ve already transformed consumer computing once before, when we introduced the modern GPU in 1999 and, with it, high-performance computing for consumer applications. Today, not only your computer, but also your tablet, smartphone, automobile, and television have GPUs in them. They provide a level of performance that once would have required a supercomputer available only to power users. As a result, we all enjoy a new level of productivity, convenience, and entertainment. Now we’re all power users, thanks to invisible and pervasive GPUs in our devices.

For VR to become a seamless part of our lives, the VR systems must become more comfortable, easy to use, affordable, and powerful. We’re inventing new headset technology that will replace modern VR’s bulky headsets with thin glasses driven by lasers and holograms. They’ll be as widespread as tablets, phones, and laptops, and even easier to operate. They’ll switch between AR/VR/MR modes instantly. And they’ll be powered by new GPUs and graphics software that will be almost unrecognizably different from today’s technology.

All of this innovation points to a new way of interacting with computers, and this will require not just new devices or software but an entirely new system for VR. At NVIDIA, we’re inventing that system with cutting-edge tools, sensors, physics, AI, processors, algorithms, data structures, and displays.

Understanding the Pipeline

NVIDIA Research is very open about what we’re working on and sharing our results through scientific publications and open source code. In Part 2 of this article, I’m going to present a technical overview of some of our recent inventions. But first, to put them and our vision for future AR/VR systems in context, let’s examine how current film, game, and modern VR systems work.

Film Graphics Systems

Hollywood-blockbuster action films contain a mixture of footage of real objects and computer generated imagery (CGI) to create amazing visual effects. The CGI is so good now that Hollywood can make scenes that are entirely computer generated. During the beautifully choreographed introduction to Marvel’s Deadpool (2016), every object in the scene is rendered by a computer instead of filmed. Not just the explosions and bullets, but the buildings, vehicles, and people.

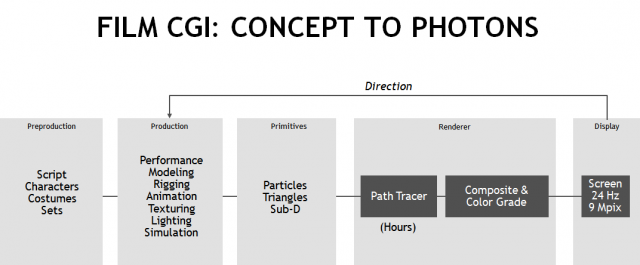

From a technical perspective, the film system for creating these images with high visual fidelity can be described by the following diagram:

The diagram has many parts, from the authoring stages on the left, through the modeling primitives of particles, triangles, and curved subdivision surfaces, to the renderer. The renderer uses an algorithm called ‘path tracing’ that photo-realistically simulates light in the virtual scene.
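
Path tracing simulates light by averaging many random samples of the light arriving at each point. The core of that idea is Monte Carlo integration, sketched below in Python for the simplest case we could pick: estimating the irradiance at a point under a uniformly bright sky, where the exact answer is π times the sky radiance. This is only an illustration of the sampling principle, not a path tracer (there are no surfaces, bounces, or scene), and the function name and uniform-sky setup are assumptions made for the example.

```python
import math
import random


def estimate_irradiance(sky_radiance: float, num_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of irradiance E = integral of L * cos(theta) over the hemisphere.

    Directions are drawn uniformly over the hemisphere (pdf = 1 / (2*pi)),
    so each sample contributes L * cos(theta) / pdf. The exact answer for a
    constant sky radiance L is pi * L.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(num_samples):
        # Under uniform hemisphere sampling, cos(theta) is uniform on [0, 1),
        # and the azimuth does not matter for a constant sky.
        cos_theta = rng.random()
        total += sky_radiance * cos_theta / pdf
    return total / num_samples


if __name__ == "__main__":
    estimate = estimate_irradiance(sky_radiance=1.0, num_samples=100_000)
    print(f"estimate={estimate:.4f}  exact={math.pi:.4f}")
```

The estimate converges toward π as the sample count grows; a production path tracer applies the same averaging recursively along light paths that bounce through the scene, which is why it needs so many samples per pixel.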

The rendering is also followed by manual post-processing of the 2D images for color and compositing. The whole process loops, as directors, editors, and artists iterate to modify the content based on visual feedback before it is shown to audiences. The image quality of film is our goal for VR realism.
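
Compositing layers rendered elements over filmed plates and over each other. The standard building block is the Porter-Duff "over" operator; the minimal Python sketch below applies it to single pixels and assumes premultiplied-alpha RGBA values in [0, 1]. Compositing packages work on whole images with many more operators, and the function names here are illustrative, not any particular tool's API.

```python
from typing import List, Tuple

# A premultiplied-alpha RGBA pixel: (r * a, g * a, b * a, a), each in [0, 1].
Pixel = Tuple[float, float, float, float]


def over(fg: Pixel, bg: Pixel) -> Pixel:
    """Porter-Duff 'over': place the foreground pixel on top of the background.

    With premultiplied alpha, every channel (including alpha) uses the same
    formula: out = fg + bg * (1 - fg_alpha).
    """
    fg_alpha = fg[3]
    r, g, b, a = (f + bk * (1.0 - fg_alpha) for f, bk in zip(fg, bg))
    return (r, g, b, a)


def composite(layers: List[Pixel]) -> Pixel:
    """Composite layers ordered back to front (first element is furthest back)."""
    result: Pixel = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result


if __name__ == "__main__":
    blue_plate = (0.0, 0.0, 1.0, 1.0)   # opaque background
    red_effect = (0.5, 0.0, 0.0, 0.5)   # 50%-transparent red, premultiplied
    print(composite([blue_plate, red_effect]))
```

Premultiplied alpha is used here because it makes "over" a single multiply-add per channel and keeps repeated compositing associative.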

Game Systems

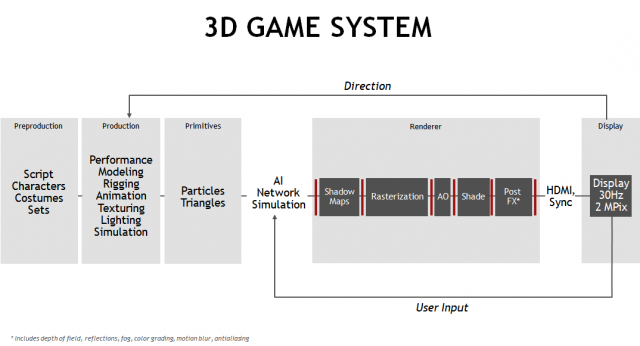

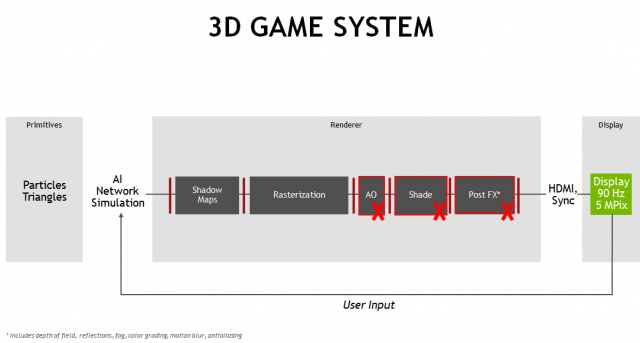

The film graphics system evolved into a similar system for 3D games. Games represent our target for VR interaction speed and flexibility, even for non-entertainment applications. The game graphics system looks like this diagram:

I’m specifically showing a deferred shading pipeline here. That’s what most PC games use because it delivers the highest image quality and throughput.

Like film, it begins with the authoring process and has the big art direction loop. Games add a crucial interaction loop for the player. When the player sees something on-screen, they react with a button press. That input then feeds into a later frame in the graphics processing pipeline. This process introduces ‘latency’, which is the time it takes to update frames with new user input taken into account. In a traditional video game, latency needs to stay under 150ms for an action title to feel responsive, and keeping it that low is a challenge.

Unfortunately, there are many factors that can increase latency. For instance, games use a ‘rasterization’-based rendering algorithm instead of path tracing. The deferred-shading rasterization pipeline has a lot of stages, and each stage adds some latency. As with film, games also have a large 2D post-processing component, which is labelled ‘PostFX’ in the multi-stage pipeline referenced above. Like an assembly line, that long pipeline increases throughput and allows smooth framerates and high resolutions, but the increased complexity adds latency.

If you only look at the output, pixels are coming out of the assembly line quickly, which is why PC games have high frame rates. The catch is that the pixels spend a long time in the pipeline because it has so many stages. The red vertical lines in the diagram represent barrier synchronization points. They amplify the latency of the stages because at a barrier, the first pixel of the next stage can’t be processed until the last pixel of the previous stage is complete.
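
The throughput-versus-latency effect of barriers can be made concrete with a toy model, sketched below in Python. It assumes each stage takes a fixed time per frame and that a full barrier separates consecutive stages; the stage names and millisecond figures are invented for illustration and are not measurements of any real engine.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Stage:
    name: str
    ms: float  # time this stage needs to process one whole frame


def pipeline_stats(stages: List[Stage]) -> Tuple[float, float]:
    """Return (frame_interval_ms, latency_ms) for a pipelined chain of stages.

    Toy model: a full barrier separates consecutive stages, so a frame cannot
    enter stage k+1 until stage k has finished all of its pixels. Different
    stages work on different frames in parallel, so a finished frame leaves
    every max(stage time) ms, but each frame still spends the sum of all
    stage times inside the pipeline.
    """
    frame_interval = max(s.ms for s in stages)
    latency = sum(s.ms for s in stages)
    return frame_interval, latency


if __name__ == "__main__":
    # Hypothetical stage times, for illustration only.
    deferred = [Stage("Input/simulation", 8.0), Stage("G-buffer", 6.0),
                Stage("Lighting", 6.0), Stage("PostFX", 6.0), Stage("Display", 8.0)]
    forward = [Stage("Input/simulation", 8.0), Stage("Forward shading", 10.0),
               Stage("Display", 8.0)]
    for label, stages in (("deferred", deferred), ("forward", forward)):
        interval, latency = pipeline_stats(stages)
        print(f"{label}: new frame every {interval} ms, latency {latency} ms")
```

With these made-up numbers, the longer chain emits a frame every 8ms but holds each frame for 34ms, while merging shading and post-processing into one forward pass lengthens the frame interval to 10ms (lower throughput) and cuts latency to 26ms. That is the same trade-off VR developers face when they shorten the pipeline.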

The game pipeline can deliver amazing visual experiences. With careful art direction, games can approach film CGI or even live-action film quality on a top-of-the-line GPU. For example, look at the video game Star Wars: Battlefront II (2017).

Still, the best frames from a Star Wars video game will be much more static than those from a Star Wars movie. That’s because game visual effects must be tuned for performance. This means that the lighting and geometry can’t change in the epic ways we see on the big screen. You’re probably familiar with relatively static gameplay environments that only switch to big set-piece explosions during cut scenes.

Modern Virtual Reality Systems

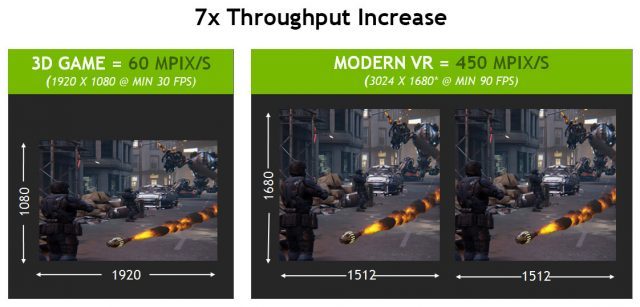

Now let’s see how film and games differ from modern VR. When developers migrate their game engines to VR, the first challenge they hit is the specification increase. There’s a jump in raw graphics power from 60 million pixels per second (MPix/s) in a game to 450 MPix/s for VR. And that’s just the beginning: these demands will quadruple in the next year.

450 Mpix/second on an Oculus Rift or HTC Vive today is just over seven times the number of pixels per second of 1080p gaming at 30 FPS. This is a throughput increase because it changes the rate at which pixels move through the graphics system. That’s big, but the performance challenge is even greater. Recall how game interaction latency was around 100-150ms between a player input and pixels changing on the screen for a traditional game. For VR, we need not only a seven times throughput increase, but also a seven times reduction in latency at the same time. How do today’s VR developers accomplish this? Let’s look at latency first.
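
The arithmetic behind these figures is short enough to check directly. The Python sketch below takes the 1080p/30 FPS game and the 450 Mpix/s VR figure from the text; the cross-check of where 450 Mpix/s might come from is our own assumption, not the article's: a render target of 1512x1680 per eye (roughly 1.4x the 1080x1200 panel on each axis, a commonly cited SteamVR default for the Vive), two eyes, at 90 Hz.

```python
# Pixel throughput of 1080p gaming at 30 FPS, as in the text.
GAME_PIXELS_PER_SEC = 1920 * 1080 * 30          # 62,208,000

# The article's figure for an Oculus Rift or HTC Vive today.
VR_PIXELS_PER_SEC = 450e6

# Assumption (not from the article): 1512x1680 render target per eye,
# two eyes, 90 Hz refresh.
VR_ESTIMATE = 1512 * 1680 * 2 * 90              # 457,228,800

# How many times more pixels per second VR must produce.
throughput_ratio = VR_PIXELS_PER_SEC / GAME_PIXELS_PER_SEC

# Shrinking the ~150ms game latency by the same factor gives a rough VR budget.
GAME_LATENCY_MS = 150.0
vr_latency_budget_ms = GAME_LATENCY_MS / throughput_ratio

print(f"game throughput:   {GAME_PIXELS_PER_SEC / 1e6:.1f} Mpix/s")
print(f"VR (assumed eyes): {VR_ESTIMATE / 1e6:.1f} Mpix/s")
print(f"throughput ratio:  {throughput_ratio:.2f}x")
print(f"VR latency budget: {vr_latency_budget_ms:.1f} ms")
```

Under these assumptions the ratio comes out a little above 7, and dividing the 150ms game latency by it leaves a budget of roughly 20ms.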

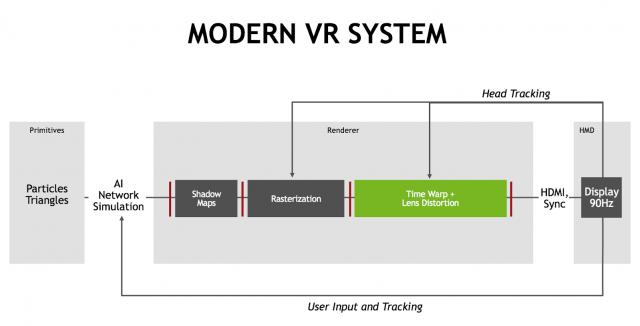

In the diagram below, latency is the time it takes data to move from the left to the right side of the system. More stages in the system give better throughput because they can work in parallel, but they also make the pipeline longer, so latency gets worse. To reduce latency, you need to eliminate boxes and red lines.

As you might expect, to reduce latency developers remove as many stages as they can, as shown in the modified diagram above. That means switching back to a ‘forward’ rendering pipeline where everything is done in one 3D pass over the scene instead of multiple 2D shading and PostFX passes. This reduces throughput, which developers compensate for by significantly lowering image quality. Unfortunately, it still doesn’t give quite enough latency reduction.

The key technology that helped close the latency gap in modern VR is called Time Warp. Under Time Warp, images shown on screen can be updated without a full trip through the graphics pipeline. Instead, the head tracking data are routed to a GPU stage that appears after rendering is complete. Because this stage is ‘closer’ to the display, it can warp the already-rendered image to match the latest head-tracked data, without taking a trip through the entire rendering pipeline. With some predictive techniques, this brings the perceived latency down from about 50ms to zero in the best case.
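
The core of a rotational warp fits in a few lines. A real Time Warp re-projects the whole image on the GPU using the full 3D head rotation, often with prediction. The Python sketch below simplifies to a single image row and a pure yaw (left/right) rotation, and assumes a pinhole camera, nearest-neighbor sampling, yaw in degrees that is positive when turning right, and a hypothetical function name.

```python
import math
from typing import List, Optional


def timewarp_row(src_row: List[float], hfov_deg: float,
                 render_yaw_deg: float, latest_yaw_deg: float) -> List[Optional[float]]:
    """Re-project one already-rendered image row to the latest head yaw.

    For each output pixel, find the world ray it sees under the latest yaw,
    then look up where that same ray landed in the image rendered at the old
    yaw. Pixels whose ray falls outside the rendered image become None (holes).
    """
    width = len(src_row)
    cx = (width - 1) / 2.0
    focal = (width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    delta = math.radians(latest_yaw_deg - render_yaw_deg)
    out: List[Optional[float]] = []
    for x in range(width):
        angle_out = math.atan((x - cx) / focal)   # ray angle in the new view
        angle_src = angle_out + delta             # same world ray, old view
        if abs(angle_src) >= math.pi / 2:
            out.append(None)                      # ray is behind the old camera
            continue
        xs = round(cx + focal * math.tan(angle_src))  # nearest source pixel
        out.append(src_row[xs] if 0 <= xs < width else None)
    return out


if __name__ == "__main__":
    row = [float(i) for i in range(9)]
    # Head turned 10 degrees to the right after the frame was rendered:
    # content shifts left and a hole opens at the right edge.
    print(timewarp_row(row, hfov_deg=90.0, render_yaw_deg=0.0, latest_yaw_deg=10.0))
```

The warp never re-runs shading; it only moves existing pixels, which is why it can sit right next to the display. The holes at the edge show one reason implementations typically render a slightly wider field of view than the display shows.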

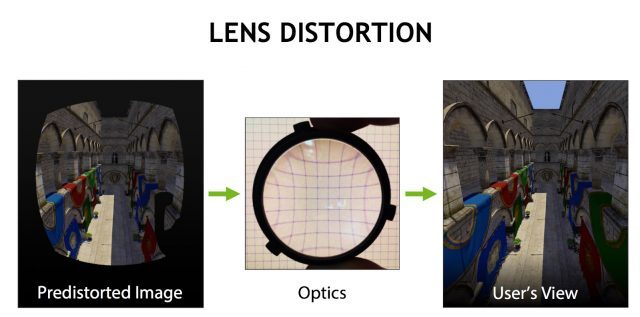

Another key enabling idea for modern VR hardware is Lens Distortion. A good camera’s optics contain at least five high quality glass lenses. Unfortunately, that’s heavy, large, and expensive, and you can’t strap the equivalent of two SLR cameras to your head.

This is why many head-mounted displays use a single inexpensive plastic lens per eye. These lenses are light and small, but low quality. To correct for the distortion and chromatic aberration from a simple lens, shaders pre-distort the images by the opposite amounts.
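
A common way to express such a pre-distortion is a radial polynomial evaluated per display pixel, with a slightly different scale per color channel. The Python sketch below shows that shape of computation; the polynomial form is a standard radial-distortion model we chose for illustration, and the coefficients `K1`, `K2` and per-channel scales are hypothetical placeholders, not values from any real headset (real ones come from calibrating a specific lens).

```python
from typing import Dict, Tuple

# Hypothetical radial distortion coefficients, for illustration only.
K1, K2 = 0.22, 0.24
# Hypothetical per-channel scales so each color is pre-distorted separately,
# standing in for a chromatic aberration correction.
CHANNEL_SCALE = {"r": 1.000, "g": 1.012, "b": 1.025}


def predistort_lookup(x: float, y: float) -> Dict[str, Tuple[float, float]]:
    """For a display pixel at lens-centered coordinates (x, y), return where
    each color channel should sample the rendered (undistorted) image.

    Coordinates are normalized so the lens center is (0, 0). With K1, K2 > 0
    each pixel samples from further out than itself, which squeezes the image
    toward the center (barrel pre-distortion) so that the lens's opposite
    distortion stretches it back out.
    """
    r2 = x * x + y * y
    radial = 1.0 + K1 * r2 + K2 * r2 * r2
    return {ch: (x * radial * s, y * radial * s) for ch, s in CHANNEL_SCALE.items()}


if __name__ == "__main__":
    for point in [(0.0, 0.0), (0.5, 0.0), (0.7, 0.7)]:
        print(point, predistort_lookup(*point))
```

In a real renderer this lookup runs in a shader for every display pixel (or is baked into a distortion mesh), which is why it costs GPU time at the very end of the pipeline.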

NVIDIA GPU hardware and our VRWorks software accelerate the modern VR pipeline. The GeForce GTX 1080 and other Pascal architecture GPUs use a new feature called Simultaneous Multiprojection to render multiple views with increased throughput and reduced latency. This feature provides single-pass stereo, so that both eyes render at the same time, along with lens-matched shading, which renders directly into the predistorted image and gives better performance and more sharpness. The GDDR5X memory in the 1080 provides 1.7x the bandwidth of the previous generation, and hardware audio and physics help create a more accurate virtual world to increase immersion.
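
The idea behind single-pass stereo can be illustrated on the CPU: each vertex is fetched and transformed once, and only the final projection is done twice, once per eye, because the two views differ only by a horizontal offset. The Python sketch below is a conceptual illustration of that idea, not how the Pascal hardware implements it; the interpupillary distance `IPD_M`, the pinhole projection, and the function names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]

IPD_M = 0.064  # hypothetical interpupillary distance in meters


def project(p: Vec3, eye_x: float, focal: float = 1.0) -> Vec2:
    """Pinhole projection of a view-space point (camera looks down -z)
    for an eye displaced eye_x meters along the x axis."""
    x, y, z = p
    depth = -z
    return (focal * (x - eye_x) / depth, focal * y / depth)


def single_pass_stereo(vertices: List[Vec3],
                       model_offset: Vec3) -> Tuple[List[Vec2], List[Vec2]]:
    """Visit each vertex once and emit its projected position for both eyes."""
    half = IPD_M / 2.0
    left: List[Vec2] = []
    right: List[Vec2] = []
    for v in vertices:
        # Shared per-vertex work, done once for both eyes (a translation
        # standing in for the full model-to-view transform).
        p = (v[0] + model_offset[0], v[1] + model_offset[1], v[2] + model_offset[2])
        # Only this last step differs between the eyes: the x offset.
        left.append(project(p, -half))
        right.append(project(p, +half))
    return left, right


if __name__ == "__main__":
    tri = [(0.0, 0.0, -2.0), (0.5, 0.0, -2.0), (0.0, 0.5, -2.0)]
    print(single_pass_stereo(tri, (0.0, 0.0, 0.0)))
```

Because the y coordinate is identical in both views, the left and right results differ only horizontally, which is what allows one geometry pass to serve both eyes.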

Reduced pipeline stages, Time Warp, Lens Distortion, and a powerful PC GPU comprise the Modern VR system.

– – — – –

Now that we’ve established how film, games, and VR graphics work, stay tuned for Part 2 of this article where we’ll explore the limits of human visual perception and methods we’re exploring to get closer to them in VR systems of the future.

The post Exclusive: How NVIDIA Research is Reinventing the Display Pipeline for the Future of VR, Part 1 appeared first on Road to VR.

Read more: https://www.roadtovr.com/exclusive-how-nvidia-research-is-reinventing-the-display-pipeline-for-the-future-of-vr-part-1/

HTC Invests in 26 More AR/VR Startups in Vive X Accelerator

HTC today announced its third batch of AR/VR startups backed by the company’s Vive X accelerator program. A total of 26 new companies from across the world have been selected to participate in the program with the goal of “building and advancing the global VR/AR ecosystem.”

Started last year, Vive X is a $100 million fund for AR/VR companies that, according to HTC, provides “unparalleled access to expertise, resources, planning and an extensive network throughout the AR/VR industry.” The accelerator has already backed 66 companies over the course of its two batches.

Here’s the new batch, spanning Vive X’s operating territories of San Francisco, Beijing, Shenzhen, Taipei, and a newly announced fifth location in Tel Aviv:

San Francisco

- Apelab seeks to democratize content creation for VR/AR. Its software toolkit Spatial Stories allows developers, designers or anyone without coding knowledge to build fully interactive XR content quickly and efficiently within an immersive environment, then export it to various platforms.

- CALA offers a full-stack software solution that empowers fashion designers to turn ideas into garments faster – from first sketch to production and everything in between. Its 3D scanning technology allows consumers to easily take body measurements with smartphone photos and AR technology, then receive their orders with a perfect fit.

- Cloudgate Studio is an acclaimed game development studio behind the hit VR titles Brookhaven Experiment and Island 359. A brilliant team of industry veterans, Cloudgate is on a strategic path to build game tech modules that will culminate in the first hit title for VR eSports. Virtual Self, for example, allows users to see their body in VR and stream their gameplay without expensive setups.

- eLoupes provides a real-time surgical imaging system for the operating room. By combining light field rendering and head mounted displays, it lets hospitals give surgeons a solution that is superior to traditional imaging systems like microscopes while saving costs and improving patient outcomes.

- Nanome seeks to democratize science and engineering using VR and Blockchain technology. Today’s legacy systems are outdated and create enormous inefficiency in the innovation process. With an intuitive and distributed platform to interact with scientific data, Nanome will help accelerate scientific innovation like never before.

- Neurable develops a brain-computer interface for VR control. The result of innovations in neuroscience and machine learning, Neurable interprets electroencephalography (EEG) signals for real-time interaction input. Think “mind-controlled” experiences.

- Quantum Capture is building the behavioral engine that will power the world’s AI-assisted virtual agents. Combining photo-real 3D characters, procedural animation systems and support for cognitive AI, Quantum Capture aims to equip developers with the necessary tools to create amazing, virtual human-based applications, while substantially reducing costs and compressing production timelines.

- QuarkVR is the next-generation compression and streaming technology for untethered VR and AR experiences. It is a hardware agnostic software solution that supports 4K per eye resolution and is capable of streaming to a dozen simultaneous users in the same environment with minimal latency.

Beijing

- Future Tech is a Chengdu, China-based studio that creates quality games and VR content for users around the world. The team is founded by industry veterans with deep experience from global content powerhouses like Ubisoft, Gameloft, PDE, and Virtuos. Their current project “Mars Alive” has won numerous industry awards globally.

- Genhaosan (GHS) is the first-ever available VR entertainment solution for karaoke. The GHS VRK systems installed in karaoke rooms allow users to enjoy immersive experiences such as singing like a rock star on stage, interacting with their audience and sharing their performance on social media.

- JuDaoEdu is dedicated to the research and development of VR labs for K-12 students. The product is applicable to Physics, Chemistry, Biology and Science education, and the company’s vision is to provide students with an authentic and safe VR lab environment.

- Lenqiy is a leading VR content developer providing innovation and creativity education to teenagers. Their current product portfolio includes science, engineering, technology, art and mathematics VR tools. By bringing creativity into product design, Lenqiy wants to make learning an enjoyable journey of exploration for teenagers.

- PanguVR is an AI-driven VR technology company with a focus on advanced computer vision. Its core engine, powered and refined by deep learning and processing thousands of terabytes of data, allows users to create 6DoF VR environments in minutes simply by uploading 3D assets or even 2D pictures. The process is fast and produces stunning quality output at only a fraction of the cost of a manual process.

- Pillow’s Willow VR Studios creates fairytale games for both 3DoF and 6DoF VR, excelling with its high-quality visuals while maintaining maximum performance. Their non-violent casual VR games featuring curious, likeable characters, are fun, easy to play and suitable for all ages.

- Yue Cheng Tech aims to become the Netflix of VR. The company has selected more than 300 top-quality VR titles from 50+ partners in 15 countries, introduced them to China, and created the world’s first professional VR cinema. YCT also produces world-class content with the world’s top talent from the visual effects and movie industries.

Shenzhen

- Antilatency specializes in positional tracking solutions for VR/AR that enable multiplayer VR experiences within the tracking area without scale limits, using mobile or tethered VR headsets.

- Configreality understands deeply how human spatial perception works across physical and virtual space simultaneously. With its proprietary spatial compression algorithm, users can feel as if they are walking in an infinitely large space even when the physical space is limited.

- Super Node is a visual intelligence company whose full-stack solutions enable machines to learn their surrounding environments. The solution brings low-cost, high-accuracy obstacle avoidance, 6DoF tracking and SLAM (Simultaneous Localization and Mapping) capabilities to VR, AR and robotics.

- VRWaibao offers a multiplayer collaboration platform in VR, while also creating a wide range of enterprise VR applications for customers in banking, manufacturing, real estate, healthcare, military and more.

- Wewod is focused on delivering high-quality, location-based entertainment and educational VR content. The team has deep experience delivering 3D production work for renowned clients like Disney, Bandai Namco, and Nintendo.

Taipei

- COVER provides a virtual livestreaming platform on which users perform as avatars for audiences watching on mobile devices. It also offers live shows featuring its own virtual celebrities.

- Looxid Labs has developed an emotional analytics platform optimized for VR, using bio-sensors that measure users’ eye and brain activity. Its machine learning algorithm is capable of accurately analyzing users’ emotional state, providing valuable data that can be leveraged to make a real impact on users’ VR experiences.

- Red Pill Lab applies deep learning algorithms to optimize the workflow of real-time character animation. Its voice-to-facial engine and full body IK-solver work together to add a new level of realism to virtual characters in VR games.

- VRCollab takes BIM (Building Information Modelling) to the next level, enabling architects, engineers, planners and consultants to collaborate seamlessly on construction projects. It is a software solution that instantly converts BIM models for use in design reviews, building requirement approval and construction coordination, as well as automated document generation.

Tel Aviv

- Astral Vision turns existing amusement park rides into VR attractions, offering a compelling and refreshing customer experience without engaging in capital-intensive upgrades.

- REMMERSIVE was founded by award-winning technologists and race driving champions, and creates a new breed of fully immersive driving simulators that provide a far more engaging and true-to-life experience.

The post HTC Invests in 26 More AR/VR Startups in Vive X Accelerator appeared first on Road to VR.

Read more: https://www.roadtovr.com/htc-invests-26-arvr-startups-vive-x-accelerator/

Madcap Puzzle Adventure ‘Elevator … to the Moon!’ Comes to SteamVR Headsets

Elevator … to the Moon! (2017) has been available for Oculus Rift and Gear VR since its release in October. Now, the stylish low-poly puzzler is available on SteamVR headsets via Steam, including HTC Vive, Windows VR headsets and Oculus Rift.

You’ve been placed in the space boots of an astronaut tasked with fulfilling the ridiculous demands of President of the World, the very Schwarzenegger-esque Doug-Slater Roccmeier. President Roccmeier needs you to not only build his massively unstable elevator but follow his orders to the letter, no matter how silly and pointless they may seem. If you choose to disobey, you can cause what the Roccat Games Studio calls “hilarious havoc.”

The game includes both classic point-and-click controls and full motion controls so you can physically rummage around in drawers, press buttons, etc.

The Steam version of the game can be had at a temporary 20% discount, knocking it from $10 to $8. Check out the trailer below to get a better idea of what madness is in store.

The post Madcap Puzzle Adventure ‘Elevator … to the Moon!’ Comes to SteamVR Headsets appeared first on Road to VR.

Read more: https://www.roadtovr.com/elevator-moon-comes-steam-vr-vive-rift/

‘Doom VFR’ is Coming with Classic ‘Doom’ Maps, Gameplay Video Here

Doom VFR, id Software’s upcoming standalone VR game, is nearly here, coming December 1st for PSVR and HTC Vive. It looks like the studio is turning the nostalgia up to 11 with the latest revelation that the game will also feature classic maps lifted from the original Doom (1993).

Confirmed by IGN, both ‘Toxic Refinery’ and ‘Nuclear Plant’ will be available to play at launch.

According to IGN, the maps are unlocked by playing through the main campaign, although it’s currently unclear how this will be achieved, be it an Easter egg hunt like the 2016 Doom or a simple unlocking once you’ve completed the game.

While the 4-minute gameplay video below features teleportation, free movement is also an option throughout the entire game, which the studio calls ‘dash’ movement. That ought to kindle the nostalgia a little better than teleporting around from spot to spot. And yes – just like the original, the enemies are in all the right spots waiting for you to breeze on by, albeit the new baddies in their higher-resolution 3D glory.

Doom VFR is Bethesda’s second big IP revival. Unlike Skyrim VR for PSVR, which is a VR port of the 2011 title, the new VR Doom game is built from the ground up for VR headsets and features a unique storyline.

It not only features support for motion controllers, but in the case of PSVR it also supports DualShock 4 controllers and PS Aim. Check out our hands-on with Doom VFR’s Aim support here.

The post ‘Doom VFR’ is Coming with Classic ‘Doom’ Maps, Gameplay Video Here appeared first on Road to VR.

Read more: https://www.roadtovr.com/doom-vfr-coming-classic-doom-maps-gameplay-video/

‘Light Strike Array’ Fuses PVP Combat with Novel Locomotion

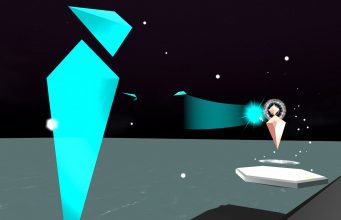

For some games, VR locomotion is simply a means to an end: getting you from point A to point B in an efficient manner so other game mechanics can take the spotlight. Not so in the upcoming PvP battle arena Light Strike Array, which integrates a novel form of teleportation into the very heart of gameplay.

As a crystalline being called a Shard, you’re given a torch and a weapon to combat other players. While it’s easy to explain the weapons (slash, shoot, smash enemies), the torch is another story. Throwing the torch teleports you to a new ‘Cell’, or landing pad. Sounds simple, right? Well, there’s a catch (literally). You have to catch the torch as soon as you land, otherwise you’re left defenseless, effectively leaving you with more to worry about than just the enemy’s arrows or blades.

Chris Woytowitz, lead developer at indie studio Unwieldy Systems, says he chose this unique brand of teleportation not only to mitigate the risk of motion sickness, but also to act as a natural skill cost on long-range movement, incentivising players to mix teleportation with local movement instead of over-relying on teleportation while standing in the same spot in their room. It’s easy to imagine dodging arrows, tossing a torch to find cover, and missing the catch by a fraction of a second with disastrous effect, something that wouldn’t happen if you were given free rein to teleport wherever you like.

As for the PvP combat, here’s a quick breakdown:

Two teams of up to three players fight to destroy each other’s ‘Heart’, which is shielded by a powerful force field called the ‘Corona’. To penetrate the Corona, you need to create special tools from a gatherable resource called ‘Salt’. Salt is obtained by collecting halite crystals, which can be transformed into pillars of Salt that give you a number of tools, but not the ultimate tool. The ultimate tool, called the Censer, lets your team bypass the enemy Corona and shatter their Heart. Victory.

Weapons include Light Bombs, bows, swords, and spells; but also the tools you create using Salt.

Light Strike Array is headed to Steam Early Access next month, with support for SteamVR-compatible headsets. The game’s full release is slated to arrive sometime in 2018.

Woytowitz says the full version will be “a generally more clean, rich, and balanced experience. I plan on adding a handful of new tools and weapons to afford more playstyles, as well as secondary map objectives (for example, capturable buildings.) The style and aesthetic will be refined over time and are subject to change (within the soft parameters of “crystalpunk.”) I’ll be improving sound, voice acting, and training levels over time, as well. I hope to continue to support LSA and grow the community through Early Access, release, and the future!”

Check out the Early Access trailer below.

The post ‘Light Strike Array’ Fuses PVP Combat with Novel Locomotion appeared first on Road to VR.

Read more: https://www.roadtovr.com/light-strike-array-fuses-pvp-combat-novel-locomotion/

Tuesday, November 28, 2017

Highlight: UKRifter acts like a baby when someone steals his gun

Everything is so good-natured and friendly in VR, it's hard to get upset with dirty stinking cheats like this guy. -- Watch live at https://www.twitch.tv/ukrifter

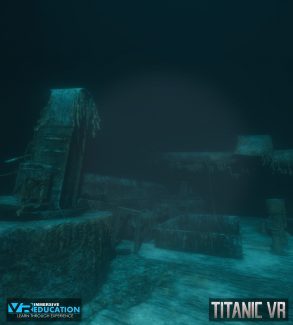

‘Titanic VR’ Dives Deep into the History of the Fateful Sinking in Educational VR Experience

Getting kids excited about learning isn’t easy, especially when the little glowing rectangle in their pockets provides endless distractions. Enter Titanic VR, a new educational experience by Immersive VR Education that brings the sunken wreckage of the RMS Titanic to life once more.

Titanic VR was built from the ground up for VR and was made using what the studio says were “comprehensive maps to create a realistic 3D model of the wreck site as well as motion capture, face-scanning technology and professional voice actors to immerse users in the story.” The studio worked with the BBC to obtain real-life testimony from the survivors themselves, creating an even deeper opportunity for learning about the disaster.

For now, the experience features a storyline set in the near future that takes you to the wreckage, as well as a sandbox mode for free exploration. There are also bonus missions such as rescuing a lost Remotely Operated Vehicle (ROV), creating a photo mosaic, placing research equipment, and cleaning and preserving recovered artifacts. Since it’s currently in Early Access, there’s still more to come, including an animated 1912 experience being released later this year in which players will witness historically accurate events through the eyes of a survivor.

Titanic VR was the result of a successful Kickstarter campaign created by studio founder David Whelan. With the campaign raising over €57,000, the studio has built the educational experience for all major VR platforms, including HTC Vive, Oculus Rift, and PlayStation VR. Support for Windows VR headsets is slated to come later this year.

“Increasingly, educators are realising that simulated learning can make a real difference in learning outcomes. With the improved availability of affordable hardware led by major internationals such as Apple, Samsung and Google, the VR/AR market will inevitably gain traction and eventually become an everyday technology,” said Whelan. “We are leading this revolution, utilising leading edge VR/AR technologies to enhance digital learning through our fully immersive social learning platform, Engage and our proprietary experiences.”

The team is also known for Apollo 11 VR, a similar dive into history that not only lets you blast off aboard a Saturn V rocket and then land and walk on the Moon, but also injects actual audio from the mission into the experience. According to Whelan, Apollo 11 VR has sold over 80,000 copies so far. Immersive VR Education hopes to replicate that success with the new Titanic VR experience.

You can download Titanic VR on Steam Early Access, which includes support for Vive and Rift. The PSVR version doesn’t appear to have hit PSN yet, but we’ll update once it does.

The post ‘Titanic VR’ Dives Deep into the History of the Fateful Sinking in Educational VR Experience appeared first on Road to VR.

Read more: https://www.roadtovr.com/titanic-vr-dives-deep-history-fateful-sinking-educational-vr-experience/
