Today during Magic Leap’s developer livestream the company offered the first details on the compute hardware that’s built into the device, confirming an NVIDIA CPU/GPU module, and also showed a brief developer sample demonstrating world meshing and gesture input.

Speaking during a developer livestream today, Magic Leap’s Alan Kimball, part of the company’s developer relations team, offered the first details on what developers can expect in terms of the power and performance on the AR headset.

The headset connects to a belt-clipped module which contains the battery and compute hardware. Inside the box is NVIDIA’s TX2, a powerful module which contains three processors: a quad-core ARM A57 CPU, a dual-core Denver 2 CPU, and an NVIDIA Pascal-based GPU with 256 CUDA cores.

The hardware resources are divided between the system and the developer. The headset’s underlying ‘Lumin OS’ is relegated to two of the A57 cores and one of the Denver 2 cores, leaving the other half of the cores for developers to use without fear that system processes will interrupt content. Kimball said that the Unity and Unreal engine integrations for Magic Leap already handle much of the work of balancing load across the developer-available cores (two A57 cores and one Denver 2 core).

Magic Leap’s Chief Game Designer, Graeme Devine, said of the device, “It’s a console,” referring to the way that a portion of hardware resources are exclusively reserved for developers, similar to game consoles, as opposed to a platform like the PC where unrelated processes and background apps can easily impact the performance of active applications.

It isn’t clear at this time how the GPU resources will be distributed between the system and developer content, but Kimball said that the system supports a wide variety of graphics APIs including OpenGL, OpenGL ES 3.1, and Vulkan.

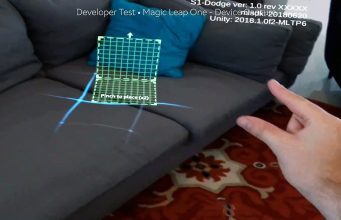

The company also offered a brief glimpse of a new developer sample application called ‘Dodge’. While the footage wasn’t shot through the headset’s lens, it was recorded with the device’s capture function, which records the real world from the headset’s camera and then composites the digital content into the view in real time. So what we’re seeing is the same graphics and interactions that you’d see through the headset, but the field of view and any artifacts imposed by the display/lenses aren’t represented.

In the footage above we can see how the device uses a pinching gesture, similar to HoloLens, to act as a button press. At one point the hand is used to smack a boulder out of the way, showing that the hand-tracking system can do more than just detect gestures. The headset also has a 6DOF controller, but it isn’t shown in this demo.

The blue grid in the demo shows the headset’s perception of the user’s room, and Magic Leap said during the session that this geometry mesh is updated continuously.

During the livestream the company also affirmed that it is on track to ship the Magic Leap One this summer (which ends September 22nd).

The post Magic Leap Reveals Developer Demo, Confirms NVIDIA TX2 Hardware appeared first on Road to VR.

Read more: https://www.roadtovr.com/magic-leap-ar-developer-demo-nvidia-tx2-cpu-gpu-hardware/
